The Query Observability Of StarRocks

作者: 康凯森

日期: 2023-02-05

分类: OLAP

- 1 StarRocks Explain + SQL

- 2 StarRocks SQL Optimizer Trace performance

- 3 StarRocks SQL Optimizer Detail Trace Log

- 4 StarRocks Execution Trace visualization

- 5 StarRocks Query Profile

- 6 StarRocks Query Dump

- 7 StarRocks SQL Audit Log

- 8 StarRocks Runtime Query metrics

- 9 StarRocks Big Query

- 10 StarRocks information schema table

- 11 StarRocks 统计信息表

- 12 FE 动态执行脚本

- 13 BE 动态执行脚本

过去两年,我们开发了很多查询层的分析,诊断工具,加强了 StarRocks 查询层的可观测性,今天给大家简单介绍下,之后我们还会在可观测性方面继续加强。

1 StarRocks Explain + SQL

参考 https://docs.starrocks.io/en-us/latest/administration/Query_planning#plan-analysis 可以看到每个 SQL 的执行计划

explain SELECT t2_126.c_2_0 FROM t2 AS t2_126 INNER JOIN (SELECT v0_127.c_0_2, v0_127.c_0_7 FROM v0 AS v0_127 WHE

RE EXISTS (SELECT v0_127.c_0_7 FROM t1 AS t1_125, v0 AS v0_127) ) subv0 ON t2_126.c_2_5 = subv0.c_0_2;

1.1 StarRocks Explain logical + SQL

相比 Explain + SQL,信息更少,可以更方便地看到整个执行计划

mysql> Explain logical SELECT t2_126.c_2_0 FROM t2 AS t2_126 INNER JOIN (SELECT v0_127.c_0_2, v0_127.c_0_7 FROM v0 AS v0_127 WHERE EXISTS (SELECT v0_127.c_0_7 FROM t1 AS t1_125, v0 AS v0_127) ) subv0 ON t2_126.c_2_5 = subv0.c_0_2;

+-----------------------------------------------------------------------------------------------------------------------+

| Explain String |

+-----------------------------------------------------------------------------------------------------------------------+

| - Output => [1:c_2_0] |

| - HASH/INNER JOIN [61:cast = 60:cast] => [1:c_2_0] |

| Estimates: {row: 1, cpu: 6.00, memory: 2.00, network: 0.00, cost: 911.02} |

| - EXCHANGE(SHUFFLE) [61] |

| Estimates: {row: 1, cpu: 4.00, memory: 0.00, network: 0.00, cost: 902.50} |

| - NESTLOOP/CROSS JOIN => [61:cast] |

| Estimates: {row: 1, cpu: 8.00, memory: 200.00, network: 0.00, cost: 900.50} |

| 61:cast := cast(10:c_0_2 as datetime) |

| - SCAN [t0] => [10:c_0_2] |

| Estimates: {row: 1, cpu: 84.00, memory: 0.00, network: 0.00, cost: 42.00} |

| partitionRatio: 1/5, tabletRatio: 3/3 |

| predicate: cast(10:c_0_2 as datetime) IS NOT NULL |

| - EXCHANGE(BROADCAST) |

| Estimates: {row: 1, cpu: 1.00, memory: 1.00, network: 1.00, cost: 454.50} |

| - LIMIT [1] |

| - EXCHANGE(GATHER) |

| Estimates: {row: 1, cpu: 1.00, memory: 0.00, network: 1.00, cost: 450.50} |

| - NESTLOOP/CROSS JOIN => [65:auto_fill_col] |

| Estimates: {row: 1, cpu: 2.00, memory: 200.00, network: 0.00, cost: 448.50} |

| 65:auto_fill_col := 1 |

| limit: 1 |

| - SCAN [t1] => [67:auto_fill_col] |

| Estimates: {row: 1, cpu: 2.00, memory: 0.00, network: 0.00, cost: 1.00} |

| partitionRatio: 1/9, tabletRatio: 3/3 |

| 67:auto_fill_col := 1 |

| limit: 1 |

| - EXCHANGE(BROADCAST) |

| Estimates: {row: 1, cpu: 1.00, memory: 1.00, network: 1.00, cost: 46.50} |

| - SCAN [t0] => [66:auto_fill_col] |

| Estimates: {row: 1, cpu: 85.00, memory: 0.00, network: 0.00, cost: 42.50} |

| partitionRatio: 1/5, tabletRatio: 3/3 |

| 66:auto_fill_col := 1 |

| - EXCHANGE(SHUFFLE) [60] |

| Estimates: {row: 1, cpu: ?, memory: ?, network: ?, cost: 2.5} |

| - SCAN [t2] => [1:c_2_0, 60:cast] |

| Estimates: {row: 1, cpu: ?, memory: ?, network: ?, cost: 1.5} |

| partitionRatio: 0/46, tabletRatio: 0/0 |

| 60:cast := cast(6:c_2_5 as datetime) |

| predicate: cast(6:c_2_5 as datetime) IS NOT NULL |

+-----------------------------------------------------------------------------------------------------------------------+

39 rows in set (0.04 sec)

1.2 Starrocks Explain Cost + SQL

相比 Explain + SQL,信息更多,有了列的统计信息,列的类型,Nullable 等信息

mysql> Explain costs SELECT t2_126.c_2_0 FROM t2 AS t2_126 INNER JOIN (SELECT v0_127.c_0_2, v0_127.c_0_7 FROM v0 AS v0_127

WHERE EXISTS (SELECT v0_127.c_0_7 FROM t1 AS t1_125, v0 AS v0_127) ) subv0 ON t2_126.c_2_5 = subv0.c_0_2;

+--------------------------------------------------------------------------------------+

| Explain String |

+--------------------------------------------------------------------------------------+

| PLAN FRAGMENT 0(F10) |

| Output Exprs:1: c_2_0 |

| Input Partition: UNPARTITIONED |

| RESULT SINK |

| |

| 19:EXCHANGE |

| cardinality: 1 |

| |

| PLAN FRAGMENT 1(F09) |

| |

| Input Partition: HASH_PARTITIONED: 61: cast |

| OutPut Partition: UNPARTITIONED |

| OutPut Exchange Id: 19 |

| |

| 18:Project |

| | output columns: |

| | 1 <-> [1: c_2_0, DATE, true] |

| | cardinality: 1 |

| | column statistics: |

| | * c_2_0-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| | |

| 17:HASH JOIN |

| | join op: INNER JOIN (PARTITIONED) |

| | equal join conjunct: [61: cast, DATETIME, true] = [60: cast, DATETIME, true] |

| | output columns: 1 |

| | cardinality: 1 |

| | column statistics: |

| | * c_2_0-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| | * cast-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| | * cast-->[1.2638304E9, 1.2638304E9, 0.0, 4.0, 1.0] ESTIMATE |

| | |

| |----16:EXCHANGE |

| | distribution type: SHUFFLE |

| | partition exprs: [60: cast, DATETIME, true] |

| | cardinality: 1 |

| | |

| 13:EXCHANGE |

| distribution type: SHUFFLE |

| partition exprs: [61: cast, DATETIME, true] |

| cardinality: 1 |

| |

| PLAN FRAGMENT 2(F07) |

| |

| Input Partition: RANDOM |

| OutPut Partition: HASH_PARTITIONED: 60: cast |

| OutPut Exchange Id: 16 |

| |

| 15:Project |

| | output columns: |

| | 1 <-> [1: c_2_0, DATE, true] |

| | 60 <-> cast([6: c_2_5, VARCHAR, true] as DATETIME) |

| | cardinality: 1 |

| | column statistics: |

| | * c_2_0-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| | * cast-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| | |

| 14:OlapScanNode |

| table: t2, rollup: t2 |

| preAggregation: off. Reason: Has can not pre-aggregation Join |

| Predicates: CAST(6: c_2_5 AS DATETIME) IS NOT NULL |

| partitionsRatio=0/46, tabletsRatio=0/0 |

| tabletList= |

| actualRows=0, avgRowSize=3.0 |

| cardinality: 1 |

| column statistics: |

| * c_2_0-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_2_5-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * cast-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| |

| PLAN FRAGMENT 3(F00) |

| |

| Input Partition: RANDOM |

| OutPut Partition: HASH_PARTITIONED: 61: cast |

| OutPut Exchange Id: 13 |

| |

| 12:Project |

| | output columns: |

| | 61 <-> cast([10: c_0_2, DATE, false] as DATETIME) |

| | cardinality: 1 |

| | column statistics: |

| | * cast-->[1.2638304E9, 1.2638304E9, 0.0, 4.0, 1.0] ESTIMATE |

| | |

| 11:NESTLOOP JOIN |

| | join op: CROSS JOIN |

| | cardinality: 1 |

| | column statistics: |

| | * c_0_2-->[1.2638304E9, 1.2638304E9, 0.0, 4.0, 1.0] ESTIMATE |

| | * cast-->[1.2638304E9, 1.2638304E9, 0.0, 4.0, 1.0] ESTIMATE |

| | * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| | |

| |----10:EXCHANGE |

| | distribution type: BROADCAST |

| | limit: 1 |

| | cardinality: 1 |

| | |

| 1:Project |

| | output columns: |

| | 10 <-> [10: c_0_2, DATE, false] |

| | cardinality: 1 |

| | column statistics: |

| | * c_0_2-->[1.2638304E9, 1.2638304E9, 0.0, 4.0, 1.0] ESTIMATE |

| | |

| 0:OlapScanNode |

| table: t0, rollup: t0 |

| preAggregation: off. Reason: Has can not pre-aggregation Join |

| Predicates: CAST(10: c_0_2 AS DATETIME) IS NOT NULL |

| partitionsRatio=1/5, tabletsRatio=3/3 |

| tabletList=14013,14015,14017 |

| actualRows=1, avgRowSize=84.0 |

| cardinality: 1 |

| column statistics: |

| * c_0_0-->[1.2625344E9, 1.2625344E9, 0.0, 4.0, 1.0] ESTIMATE |

| * c_0_1-->[4.031006053147651E20, 4.031006053147651E20, 0.0, 16.0, 1.0] ESTIMATE |

| * c_0_2-->[1.2638304E9, 1.2638304E9, 0.0, 4.0, 1.0] ESTIMATE |

| * c_0_3-->[1.262822414E9, 1.262822414E9, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_4-->[1.2633984E9, 1.2633984E9, 0.0, 4.0, 1.0] ESTIMATE |

| * c_0_5-->[1.263081614E9, 1.263081614E9, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_6-->[9.175465853287E12, 9.175465853287E12, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_7-->[-Infinity, Infinity, 1.0, 8.0, 0.0] ESTIMATE |

| * c_0_8-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_9-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_11-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_12-->[-2.147055719E9, -2.147055719E9, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_13-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_14-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_15-->[10.0, 10.0, 0.0, 2.0, 1.0] ESTIMATE |

| * c_0_16-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_17-->[-1.037257802E9, -1.037257802E9, 0.0, 8.0, 1.0] ESTIMATE |

| |

| PLAN FRAGMENT 4(F04) |

| |

| Input Partition: UNPARTITIONED |

| OutPut Partition: UNPARTITIONED |

| OutPut Exchange Id: 10 |

| |

| 9:EXCHANGE |

| distribution type: GATHER |

| limit: 1 |

| cardinality: 1 |

| |

| PLAN FRAGMENT 5(F01) |

| |

| Input Partition: RANDOM |

| OutPut Partition: UNPARTITIONED |

| OutPut Exchange Id: 09 |

| |

| 8:Project |

| | output columns: |

| | 65 <-> 1 |

| | limit: 1 |

| | cardinality: 1 |

| | column statistics: |

| | * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| | |

| 7:NESTLOOP JOIN |

| | join op: CROSS JOIN |

| | limit: 1 |

| | cardinality: 1 |

| | column statistics: |

| | * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| | * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| | * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| | |

| |----6:EXCHANGE |

| | distribution type: BROADCAST |

| | cardinality: 1 |

| | |

| 3:Project |

| | output columns: |

| | 67 <-> 1 |

| | limit: 1 |

| | cardinality: 1 |

| | column statistics: |

| | * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| | |

| 2:OlapScanNode |

| table: t1, rollup: t1 |

| preAggregation: on |

| partitionsRatio=1/9, tabletsRatio=3/3 |

| tabletList=14054,14056,14058 |

| actualRows=1, avgRowSize=2.0 |

| limit: 1 |

| cardinality: 1 |

| column statistics: |

| * c_1_7-->[0.0, 0.0, 0.0, 1.0, 1.0] ESTIMATE |

| * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| |

| PLAN FRAGMENT 6(F02) |

| |

| Input Partition: RANDOM |

| OutPut Partition: UNPARTITIONED |

| OutPut Exchange Id: 06 |

| |

| 5:Project |

| | output columns: |

| | 66 <-> 1 |

| | cardinality: 1 |

| | column statistics: |

| | * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

| | |

| 4:OlapScanNode |

| table: t0, rollup: t0 |

| preAggregation: off. Reason: Has can not pre-aggregation Join |

| partitionsRatio=1/5, tabletsRatio=3/3 |

| tabletList=14013,14015,14017 |

| actualRows=1, avgRowSize=85.0 |

| cardinality: 1 |

| column statistics: |

| * c_0_0-->[1.2625344E9, 1.2625344E9, 0.0, 4.0, 1.0] ESTIMATE |

| * c_0_1-->[4.031006053147651E20, 4.031006053147651E20, 0.0, 16.0, 1.0] ESTIMATE |

| * c_0_2-->[1.2638304E9, 1.2638304E9, 0.0, 4.0, 1.0] ESTIMATE |

| * c_0_3-->[1.262822414E9, 1.262822414E9, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_4-->[1.2633984E9, 1.2633984E9, 0.0, 4.0, 1.0] ESTIMATE |

| * c_0_5-->[1.263081614E9, 1.263081614E9, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_6-->[9.175465853287E12, 9.175465853287E12, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_7-->[-Infinity, Infinity, 1.0, 8.0, 0.0] ESTIMATE |

| * c_0_8-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_9-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_11-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_12-->[-2.147055719E9, -2.147055719E9, 0.0, 8.0, 1.0] ESTIMATE |

| * c_0_13-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_14-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_15-->[10.0, 10.0, 0.0, 2.0, 1.0] ESTIMATE |

| * c_0_16-->[-Infinity, Infinity, 0.0, 1.0, 1.0] UNKNOWN |

| * c_0_17-->[-1.037257802E9, -1.037257802E9, 0.0, 8.0, 1.0] ESTIMATE |

| * auto_fill_col-->[1.0, 1.0, 0.0, 1.0, 1.0] ESTIMATE |

+--------------------------------------------------------------------------------------+

226 rows in set (0.04 sec)

2 StarRocks SQL Optimizer Trace performance

功能: 可以详细看到 SQL Optimize 阶段每一步的具体函数,可以用来做 Optimizer 的性能分析

mysql> TRACE OPTIMIZER SELECT t2_126.c_2_0 FROM t2 AS t2_126 INNER JOIN (SELECT v0_127.c_0_2, v0_127.c_0_7 FROM v0 AS v0_127 WHERE EXISTS (SELECT v0_127.c_0_7 FROM t1 AS t1_125, v0 AS v0_127) ) subv0 ON t2_126.c_2_5 = subv0.c_0_2;

+-------------------------------------------------------------------------------------+

| Explain String |

+-------------------------------------------------------------------------------------+

| 4229908ms|-- Total[1] 48ms |

| 4229908ms| -- Analyzer[1] 33ms |

| 4229942ms| -- Transformer[1] 1ms |

| 4229943ms| -- Optimizer[1] 12ms |

| 4229943ms| -- Optimizer.RuleBaseOptimize[1] 3ms |

| 4229943ms| -- Optimizer.RuleBaseOptimize.RewriteTreeTask[44] 2ms |

| 4229947ms| -- Optimizer.CostBaseOptimize[1] 8ms |

| 4229947ms| -- Optimizer.CostBaseOptimize.OptimizeGroupTask[16] 0ms |

| 4229947ms| -- Optimizer.CostBaseOptimize.OptimizeExpressionTask[40] 0ms |

| 4229947ms| -- Optimizer.CostBaseOptimize.ExploreGroupTask[48] 0ms |

| 4229947ms| -- Optimizer.CostBaseOptimize.DeriveStatsTask[40] 1ms |

| 4229947ms| -- Optimizer.CostBaseOptimize.ApplyRuleTask[61] 0ms |

| 4229948ms| -- Optimizer.CostBaseOptimize.EnforceAndCostTask[38] 5ms |

| 4229955ms| -- Optimizer.PhysicalRewrite[1] 0ms |

| 4229955ms| -- ExecPlanBuild[1] 1ms |

+-------------------------------------------------------------------------------------+

15 rows in set (0.05 sec)

3 StarRocks SQL Optimizer Detail Trace Log

功能: 可以详细看到 一个 SQL 在 Parse, Analyze, Optimize 每个阶段的详细变化,深入理解 Starrocks 优化器的执行过程

用法: set enable_optimizer_trace_log first, then query the sql, and

set enable_optimizer_trace_log = true;

Parsed Tree

[OptimizerTraceUtil.log():136] [TRACE QUERY e94c1073-a61a-11ed-a188-5254007b45e6] after parse:

QueryStatement{

queryRelation=SelectRelation{

selectList=com.starrocks.sql.ast.SelectList@373a715b

fromRelation=JoinRelation{joinType=INNER JOIN, left=t2, right=SubqueryRelation{

alias=subv0

query=QueryStatement{

queryRelation=SelectRelation{

selectList=com.starrocks.sql.ast.SelectList@74cb51d5

fromRelation=v0

predicate=EXISTS (SELECT v0_127.c_0_7 FROM t1 AS t1_125 , v0 AS v0_127 )

groupByClause=null

having=null

sortClause=[]

limit=null

}

}

}, onPredicate=BinaryPredicate{id=null, type=INVALID_TYPE, sel=-1.0, #distinct=-1, scale=-1}}

predicate=null

groupByClause=null

having=null

sortClause=[]

limit=null

}

}

AST Tree

[OptimizerTraceUtil.log():136] [TRACE QUERY e94c1073-a61a-11ed-a188-5254007b45e6] after analyze:

QueryStatement{

queryRelation=SelectRelation{

selectList=com.starrocks.sql.ast.SelectList@373a715b

fromRelation=JoinRelation{joinType=INNER JOIN, left=test.t2, right=SubqueryRelation{

alias=subv0

query=QueryStatement{

queryRelation=SelectRelation{

selectList=com.starrocks.sql.ast.SelectList@74cb51d5

fromRelation=null

predicate=EXISTS (SELECT test.v0_127.c_0_7 FROM test.t1 AS t1_125 , `test`.`v0` AS v0_127 )

groupByClause=null

having=null

sortClause=[]

limit=null

}

}

}, onPredicate=BinaryPredicate{id=null, type=BOOLEAN, sel=-1.0, #distinct=-1, scale=-1}}

predicate=null

groupByClause=null

having=null

sortClause=[]

limit=null

}

}

Init logicOperatorTree:

[OptimizerTraceUtil.log():136] [TRACE QUERY e94c1073-a61a-11ed-a188-5254007b

45e6] origin logicOperatorTree:

LogicalProjectOperator {projection=[1: c_2_0]}

-> LogicalProjectOperator {projection=[1: c_2_0, 2: c_2_1, 3: c_2_2, 4: c_2_3, 5: c_2_4, 6: c_2_5, 7: c_2_6, 10: c_0_2, 15: c_0_7]}

-> LOGICAL_JOIN {INNER JOIN, onPredicate = cast(6: c_2_5 as datetime) = cast(10: c_0_2 as datetime) , Predicate = null}

-> LogicalProjectOperator {projection=[1: c_2_0, 2: c_2_1, 3: c_2_2, 4: c_2_3, 5: c_2_4, 6: c_2_5, 7: c_2_6]}

-> LogicalOlapScanOperator {table=14154, selectedPartitionId=null, outputColumns=[1: c_2_0, 2: c_2_1, 3: c_2_2, 4: c_2_3, 5:

c_2_4, 6: c_2_5, 7: c_2_6], predicate=null, limit=-1}

-> LogicalProjectOperator {projection=[10: c_0_2, 15: c_0_7]}

-> LOGICAL_APPLY

-> LogicalProjectOperator {projection=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6, 15: c_0

_7, 16: c_0_8, 17: c_0_9, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]}

-> LogicalAggregation {type=GLOBAL ,aggregations={} ,groupKeys=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4,

13: c_0_5, 14: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]

}

-> LogicalProjectOperator {projection=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6,

15: c_0_7, 16: c_0_8, 17: c_0_9, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]}

-> LogicalFilterOperator {predicate=true}

-> LogicalProjectOperator {projection=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14

: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 18: c_0_10, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]}

-> LogicalOlapScanOperator {table=14011, selectedPartitionId=null, outputColumns=[8: c_0_0, 9: c_0_1,

10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 18: c_0_10, 19: c_0_11, 20: c_0_12, 21: c_0_13, 2

2: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17], predicate=null, limit=-1}

-> LogicalProjectOperator {projection=[48: c_0_7]}

-> LogicalProjectOperator {projection=[33: c_1_7, 34: c_1_8, 35: c_1_9, 36: c_1_10, 37: c_1_11, 38: c_1_12, 39: c_1_1

3, 40: c_1_14, 41: c_0_0, 42: c_0_1, 43: c_0_2, 44: c_0_3, 45: c_0_4, 46: c_0_5, 47: c_0_6, 48: c_0_7, 49: c_0_8, 50: c_0_9, 52: c_0_11, 5

3: c_0_12, 54: c_0_13, 55: c_0_14, 56: c_0_15, 57: c_0_16, 58: c_0_17, 26: c_1_0, 27: c_1_1, 28: c_1_2, 29: c_1_3, 30: c_1_4, 31: c_1_5, 3

2: c_1_6]}

-> LOGICAL_JOIN {CROSS JOIN, onPredicate = null , Predicate = null}

-> LogicalProjectOperator {projection=[33: c_1_7, 34: c_1_8, 35: c_1_9, 36: c_1_10, 37: c_1_11, 38: c_1_12, 3

9: c_1_13, 40: c_1_14, 26: c_1_0, 27: c_1_1, 28: c_1_2, 29: c_1_3, 30: c_1_4, 31: c_1_5, 32: c_1_6]}

The logicOperatorTree after applying some rules:

[OptimizerTraceUtil.logApplyRule():154] [TRACE QUERY e94c1073-a61a-11ed-a188-5254007b45e6] APPLY RULE TF_PUSH_DOWN_PREDICATE_PROJECT 33

Original Expression:

LogicalFilterOperator {predicate=cast(10: c_0_2 as datetime) IS NOT NULL}

-> LogicalProjectOperator {projection=[10: c_0_2, 15: c_0_7]}

-> LogicalFilterOperator {predicate=cast(10: c_0_2 as datetime) IS NOT NULL}

-> LOGICAL_JOIN {CROSS JOIN, onPredicate = null , Predicate = null}

-> LogicalProjectOperator {projection=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]}

-> LogicalAggregation {type=GLOBAL ,aggregations={} ,groupKeys=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]}

-> LogicalProjectOperator {projection=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]}

-> LogicalFilterOperator {predicate=true}

-> LogicalProjectOperator {projection=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 18: c_0_10, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17]}

-> LogicalOlapScanOperator {table=14011, selectedPartitionId=null, outputColumns=[8: c_0_0, 9: c_0_1, 10: c_0_2, 11: c_0_3, 12: c_0_4, 13: c_0_5, 14: c_0_6, 15: c_0_7, 16: c_0_8, 17: c_0_9, 18: c_0_10, 19: c_0_11, 20: c_0_12, 21: c_0_13, 22: c_0_14, 23: c_0_15, 24: c_0_16, 25: c_0_17], predicate=null, limit=-1}

-> LogicalLimitOperator {limit=1, offset=0}

The best physical plan:

[OptimizerTraceUtil.log():136] [TRACE QUERY e94c1073-a61a-11ed-a188-5254007b

45e6] after extract best plan:

PhysicalHashJoinOperator{joinType=INNER JOIN, joinPredicate=60: cast = 61: cast, limit=-1, predicate=null}

-> PhysicalDistributionOperator {distributionSpec=SHUFFLE_JOIN[61] ,globalDict=[]}

-> PhysicalNestLoopJoinOperator{joinType=CROSS JOIN, joinPredicate=null, limit=-1, predicate=null}

-> PhysicalOlapScanOperator {table=14011, selectedPartitionId=[14006], outputColumns=[10: c_0_2], projection=[10: c_0_2], predica

te=cast(10: c_0_2 as datetime) IS NOT NULL, limit=-1}

-> PhysicalDistributionOperator {distributionSpec=BROADCAST ,globalDict=[]}

-> PhysicalLimitOperator {limit=1, offset=0}

-> PhysicalDistributionOperator {distributionSpec=GATHER ,globalDict=[]}

-> PhysicalNestLoopJoinOperator{joinType=CROSS JOIN, joinPredicate=null, limit=1, predicate=null}

-> PhysicalOlapScanOperator {table=14052, selectedPartitionId=[14043], outputColumns=[], projection=[1], predicate=null, limit=1}

-> PhysicalDistributionOperator {distributionSpec=BROADCAST ,globalDict=[]}

-> PhysicalOlapScanOperator {table=14011, selectedPartitionId=[14006], outputColumns=[], projection=[1], predicate=null, limit=-1}

-> PhysicalDistributionOperator {distributionSpec=SHUFFLE_JOIN[60] ,globalDict=[]}

-> PhysicalOlapScanOperator {table=14154, selectedPartitionId=[], outputColumns=[1: c_2_0, 6: c_2_5], projection=[1: c_2_0, cast(6: c_2_5 as datetime)], predicate=cast(6: c_2_5 as datetime) IS NOT NULL, limit=-1}

每个 rule 的调用次数:

[OptimizerTraceUtil.log():136] [TRACE QUERY e94c1073-a61a-11ed-a188-5254007b45e6] OptimizerTraceInfo

Rules' applied times

{TF_JOIN_ASSOCIATIVITY_INNER 1=1, TF_PARTITION_PRUNE 8=4, TF_PUSH_DOWN_PREDICATE_AGG 28=1, TF_PRUNE_PROJECT 65=8, TF_JOIN_COMMUTATIVITY 3=10, TF_MERGE_PROJECT_WITH_CHILD 98=9, TF_PRUNE_AGG_COLUMNS 50=4, TF_PUSH_DOWN_LIMIT 20=3, IMP_LIMIT 146=1, TF_PUSH_DOWN_PREDICATE_SCAN 27=3, TF_REMOVE_AGGREGATION_BY_AGG_TABLE 92=2, IMP_EQ_JOIN_TO_HASH_JOIN 130=4, TF_MERGE_LIMIT_DIRECT 16=2, TF_PARTITION_PREDICATE_PRUNE 14=4, TF_PRUNE_PROJECT_COLUMNS 48=22, IMP_JOIN_TO_NESTLOOP_JOIN 132=6, TF_MERGE_TWO_FILTERS 42=1, TF_PUSH_DOWN_LIMIT_JOIN 24=1, TF_DISTRIBUTION_PRUNE 9=4, TF_PRUNE_JOIN_COLUMNS 53=6, TF_MERGE_TWO_PROJECT 66=9, TF_EXISTENTIAL_APPLY_TO_JOIN 71=1, TF_PUSH_DOWN_JOIN_CLAUSE 32=1, TF_PRUNE_OLAP_SCAN_COLUMNS 47=8, TF_SPLIT_LIMIT 15=1, TF_PRUNE_GROUP_BY_KEYS 62=4, TF_PUSH_DOWN_PREDICATE_PROJECT 33=6, IMP_OLAP_LSCAN_TO_PSCAN 119=5, TF_PUSH_DOWN_PREDICATE_JOIN 31=1, TF_PUSH_DOWN_JOIN_ON_EXPRESSION_TO_CHILD_PROJECT 99=1}

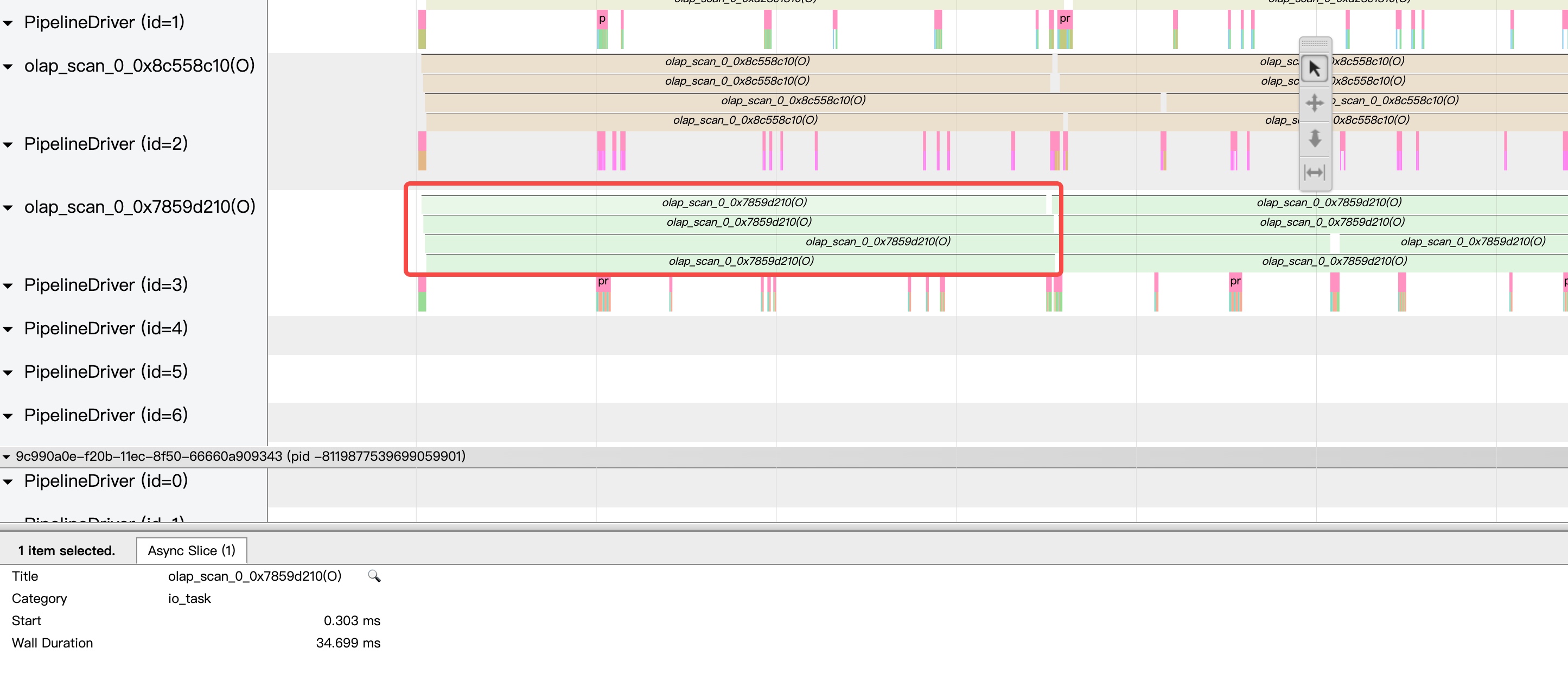

4 StarRocks Execution Trace visualization

功能: 可以可视化整个 SQL 的执行过程, 用来做性能分析

用法: 参考 https://github.com/StarRocks/starrocks/pull/7649,之后我们会补上文档

结果: 如下图所示:

5 StarRocks Query Profile

功能: 包含整个 SQL plan, 调度,执行的关键指标,可以用来做性能分析

用法: 参考 https://docs.starrocks.io/en-us/2.5/administration/query_profile

结果: 如下所示:

- Total: 107ms

- Query Type: Query

- Query State: EOF

- StarRocks Version: UNKNOWN-194073eb0

- User: root

- Default Db: test

- Sql Statement: SELECT t2_126.c_2_0 FROM t2 AS t2_126 INNER JOIN (SELECT v0_127.c_0_2, v0_127.c_0_7 FROM v0 AS v0_127 WHERE EXISTS (SELECT v0_127.c_0_7 FROM t1 AS t1_125, v0 AS v0_127) ) subv0 ON t2_126.c_2_5 = subv0.c_0_2

- QueryCpuCost: 2ms

- QueryMemCost: 121.328KB

- Variables: parallel_fragment_exec_instance_num=1,pipeline_dop=0,enable_adaptive_sink_dop=false

- Collect Profile Time: 53ms

Planner:

- Analyzer: 26ms / 1

- CoordDeliverExec: 34ms / 1

- CoordPrepareExec: 2ms / 1

- ExecPlanBuild: 1ms / 1

- Optimizer: 33ms / 1

- Total: 66ms / 1

- Transformer: 1ms / 1

Optimizer:

- CostBaseOptimize: 9ms / 1

- PhysicalRewrite: 0ms / 1

- RuleBaseOptimize: 20ms / 1

Execution Profile e94c1073-a61a-11ed-a188-5254007b45e6:

- ExecutionWallTime: 15.956ms

- OperatorCumulativeTime: 5.200ms

Fragment 0:

- BackendAddresses: 172.26.92.195:9960

- BackendNum: 1

- FragmentInstancePrepareTime: 507.601us

- prepare-fragment-ctx: 50.223us

- prepare-pipeline-driver: 113.621us

- prepare-query-ctx: 28.45us

- prepare-runtime-state: 113.621us

- InstanceNum: 1

- PeakMemoryUsage: 28.81 KB

- QueryMemoryLimit: 136.61 GB

Pipeline (id=0):

6 StarRocks Query Dump

功能: 将 SQL 执行时的整个集群,建表,统计信息等信息 一键 dump 出来,可以用来复现追查用户问题

用法:参考 https://docs.starrocks.io/en-us/latest/faq/Dump_query#querydump-interface

7 StarRocks SQL Audit Log

For every query, you could find the audit log in log/fe.audit.log file

功能:记录每个查询的 ScanRows,ScanBytes,CPU 消耗,内存消耗

用法:log/fe.audit.log

结果:如下所示

2023-02-06 20:54:44,550 [query] |Client=172.26.92.195:60684|User=root|AuthorizedUser='root'@'%'|ResourceGroup=default_wg|Catalog=default_catalog|Db=test|State=EOF|ErrorCode=|Time=165|ScanBytes=0|ScanRows=0|ReturnRows=0|CpuCostNs=1765622|MemCostBytes=327328|StmtId=34|QueryId=6dbf01e8-a61d-11ed-a188-5254007b45e6|IsQuery=true|feIp=192.168.122.1|Stmt=SELECT t2_126.c_2_0 FROM t2 AS t2_126 INNER JOIN (SELECT v0_127.c_0_2, v0_127.c_0_7 FROM v0 AS v0_127 WHERE EXISTS (SELECT v0_127.c_0_7 FROM t1 AS t1_125, v0 AS v0_127) ) subv0 ON t2_126.c_2_5 = subv0.c_0_2|Digest=|PlanCpuCost=6.0|PlanMemCost=2.0

8 StarRocks Runtime Query metrics

功能:统计每个 BE 节点的关键查询指标

用法:curl -u root:** IP:8040/metrics | grep -E "^star.pip_"

结果:如下所示

starrocks_be_pip_exe_driver_queue_len 0

starrocks_be_pip_exe_poller_block_queue_len 0

starrocks_be_pip_query_ctx_cnt 2

starrocks_be_wg_pip_exe_driver_queue_len 0

starrocks_be_wg_pip_exe_poller_block_queue_len 0

9 StarRocks Big Query

show proc '/current_queries'

show proc '/current_queries/${query_id}/hosts'

StarRocks 的慢查询会收集在 $log/slow_query.log 中。

10 StarRocks information schema table

StarRocks 在持续加强和晚上 information schema 的table信息,除了常规的 tablet schema 信息外,我们还加入以下增加可观测性的表:

be_compactions 表

可以通过 SQL 直接查询每个 BE 上 compaction 的关键信息

CREATE TABLE `be_compactions` (

`BE_ID` bigint(20) NULL COMMENT "",

`CANDIDATES_NUM` bigint(20) NULL COMMENT "",

`BASE_COMPACTION_CONCURRENCY` bigint(20) NULL COMMENT "",

`CUMULATIVE_COMPACTION_CONCURRENCY` bigint(20) NULL COMMENT "",

`LATEST_COMPACTION_SCORE` double NULL COMMENT "",

`CANDIDATE_MAX_SCORE` double NULL COMMENT "",

`MANUAL_COMPACTION_CONCURRENCY` bigint(20) NULL COMMENT "",

`MANUAL_COMPACTION_CANDIDATES_NUM` bigint(20) NULL COMMENT ""

) ENGINE=SCHEMA ;

be_configs 表

可以通过 SQL 直接查询每个 BE 上的配置信息:

mysql> select * from be_configs limit 2;

+-------+----------------------------------------+-------+

| BE_ID | NAME | VALUE |

+-------+----------------------------------------+-------+

| 10002 | alter_tablet_worker_count | 3 |

| 10002 | auto_adjust_pagecache_interval_seconds | 10 |

+-------+----------------------------------------+-------+

2 rows in set (0.02 sec)

be_logs 表

可以通过 SQL 直接查询每个 BE 上相关事务的日志信息:

CREATE TABLE `be_logs` (

`BE_ID` bigint(20) NULL COMMENT "",

`LEVEL` varchar(2048) NULL COMMENT "",

`TIMESTAMP` bigint(20) NULL COMMENT "",

`TID` bigint(20) NULL COMMENT "",

`LOG` varchar(2048) NULL COMMENT ""

) ENGINE=SCHEMA ;

mysql> select * from be_logs limit 1;

+-------+-------+------------+--------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| BE_ID | LEVEL | TIMESTAMP | TID | LOG |

+-------+-------+------------+--------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| 10002 | I | 1685401899 | 214764 | I0530 07:11:39.266544 214764 daemon.cpp:201] Current memory statistics: process(17415544), query_pool(6080), load(0), metadata(1285032), compaction(0), schema_change(0), column_pool(0), page_cache(0), update(0), chunk_allocator(0), clone(0), consistency(0) |

+-------+-------+------------+--------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (4.00 sec)

be_metrics 表

可以通过 SQL 直接查询每个 BE 上的指标信息:

mysql> select * from be_metrics limit 20;

+-------+-----------------------------------+--------+-------+

| BE_ID | NAME | LABELS | VALUE |

+-------+-----------------------------------+--------+-------+

| 10002 | active_scan_context_count | | 0 |

| 10002 | binary_column_pool_bytes | | 0 |

| 10002 | bitmap_index_mem_bytes | | 0 |

| 10002 | blocks_created_total | | 0 |

| 10002 | blocks_deleted_total | | 0 |

| 10002 | blocks_open_reading | | 0 |

| 10002 | blocks_open_writing | | 0 |

| 10002 | bloom_filter_index_mem_bytes | | 0 |

| 10002 | broker_count | | 0 |

| 10002 | brpc_endpoint_stub_count | | 1 |

| 10002 | bytes_read_total | | 0 |

| 10002 | bytes_written_total | | 0 |

| 10002 | central_column_pool_bytes | | 0 |

| 10002 | chunk_allocator_mem_bytes | | 0 |

| 10002 | chunk_pool_local_core_alloc_count | | 0 |

| 10002 | chunk_pool_other_core_alloc_count | | 0 |

| 10002 | chunk_pool_system_alloc_cost_ns | | 0 |

| 10002 | chunk_pool_system_alloc_count | | 0 |

| 10002 | chunk_pool_system_free_cost_ns | | 0 |

| 10002 | chunk_pool_system_free_count | | 0 |

+-------+-----------------------------------+--------+-------+

20 rows in set (0.02 sec)

be_txns 表

可以通过 SQL 直接查询每个 BE 上每个导入事务的关键时间信息和统计信息

CREATE TABLE `be_txns` (

`BE_ID` bigint(20) NULL COMMENT "",

`LOAD_ID` varchar(2048) NULL COMMENT "",

`TXN_ID` bigint(20) NULL COMMENT "",

`PARTITION_ID` bigint(20) NULL COMMENT "",

`TABLET_ID` bigint(20) NULL COMMENT "",

`CREATE_TIME` bigint(20) NULL COMMENT "",

`COMMIT_TIME` bigint(20) NULL COMMENT "",

`PUBLISH_TIME` bigint(20) NULL COMMENT "",

`ROWSET_ID` varchar(2048) NULL COMMENT "",

`NUM_SEGMENT` bigint(20) NULL COMMENT "",

`NUM_DELFILE` bigint(20) NULL COMMENT "",

`NUM_ROW` bigint(20) NULL COMMENT "",

`DATA_SIZE` bigint(20) NULL COMMENT "",

`VERSION` bigint(20) NULL COMMENT ""

) ENGINE=SCHEMA ;

fe_tablet_schedules 表

可以通过 SQL 直接查询 starrocks 集群 tablet 调度和 balance的状态

CREATE TABLE `fe_tablet_schedules` (

`TABLE_ID` bigint(20) NULL COMMENT "",

`PARTITION_ID` bigint(20) NULL COMMENT "",

`TABLET_ID` bigint(20) NULL COMMENT "",

`TYPE` varchar(2048) NULL COMMENT "",

`PRIORITY` varchar(2048) NULL COMMENT "",

`STATE` varchar(2048) NULL COMMENT "",

`TABLET_STATUS` varchar(2048) NULL COMMENT "",

`CREATE_TIME` double NULL COMMENT "",

`SCHEDULE_TIME` double NULL COMMENT "",

`FINISH_TIME` double NULL COMMENT "",

`CLONE_SRC` bigint(20) NULL COMMENT "",

`CLONE_DEST` bigint(20) NULL COMMENT "",

`CLONE_BYTES` bigint(20) NULL COMMENT "",

`CLONE_DURATION` double NULL COMMENT "",

`MSG` varchar(2048) NULL COMMENT ""

) ENGINE=SCHEMA ;

load_tracking_logs 表

可以查看 load 的 tracking_logs

CREATE TABLE `load_tracking_logs` (

`JOB_ID` bigint(20) NULL COMMENT "",

`LABEL` varchar(2048) NULL COMMENT "",

`DATABASE_NAME` varchar(2048) NULL COMMENT "",

`TRACKING_LOG` varchar(65535) NULL COMMENT ""

) ENGINE=SCHEMA ;

11 StarRocks 统计信息表

可以通过 column_statistics 查看列的基础统计信息

CREATE TABLE `column_statistics` (

`table_id` bigint(20) NOT NULL COMMENT "",

`partition_id` bigint(20) NOT NULL COMMENT "",

`column_name` varchar(65530) NOT NULL COMMENT "",

`db_id` bigint(20) NOT NULL COMMENT "",

`table_name` varchar(65530) NOT NULL COMMENT "",

`partition_name` varchar(65530) NOT NULL COMMENT "",

`row_count` bigint(20) NOT NULL COMMENT "",

`data_size` bigint(20) NOT NULL COMMENT "",

`ndv` hll NOT NULL COMMENT "",

`null_count` bigint(20) NOT NULL COMMENT "",

`max` varchar(65530) NOT NULL COMMENT "",

`min` varchar(65530) NOT NULL COMMENT "",

`update_time` datetime NOT NULL COMMENT ""

) ENGINE=OLAP

PRIMARY KEY(`table_id`, `partition_id`, `column_name`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`table_id`, `partition_id`, `column_name`) BUCKETS 10

PROPERTIES (

"replication_num" = "1",

"in_memory" = "false",

"storage_format" = "DEFAULT",

"enable_persistent_index" = "false",

"replicated_storage" = "true",

"compression" = "LZ4"

);

可以通过 column_statistics 查看列的直方图统计信息

CREATE TABLE `histogram_statistics` (

`table_id` bigint(20) NOT NULL COMMENT "",

`column_name` varchar(65530) NOT NULL COMMENT "",

`db_id` bigint(20) NOT NULL COMMENT "",

`table_name` varchar(65530) NOT NULL COMMENT "",

`buckets` varchar(65530) NULL COMMENT "",

`mcv` varchar(65530) NULL COMMENT "",

`update_time` datetime NOT NULL COMMENT ""

) ENGINE=OLAP

PRIMARY KEY(`table_id`, `column_name`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`table_id`, `column_name`) BUCKETS 10

PROPERTIES (

"replication_num" = "1",

"in_memory" = "false",

"storage_format" = "DEFAULT",

"enable_persistent_index" = "false",

"replicated_storage" = "true",

"compression" = "LZ4"

);

12 FE 动态执行脚本

StarRocks 支持通过 SQL 命令行在 FE 执行 groovy 脚本,需要 admin 权限,这样获取 FE 进程的运行时信息就很方便,下面是个 获取 JVM 内存信息 和 获取线程堆栈的示例:

mysql> admin execute on frontend '

import com.starrocks.monitor.jvm.JvmInfo;

out.append("${JvmInfo.getMemoryStats()}")

';

Query OK, 0 rows affected (0.02 sec)

Pool: Code Cache

- Initial: 2555904

- Used: 15780288

- Committed: 16121856

- Maximum: 251658240

Pool: Metaspace

- Initial: 0

- Used: 62422232

- Committed: 63815680

- Maximum: -1

Pool: Compressed Class Space

- Initial: 0

- Used: 7503856

- Committed: 7856128

- Maximum: 1073741824

Pool: Par Eden Space

- Initial: 280756224

- Used: 253276208

- Committed: 280756224

- Maximum: 1145372672

Pool: Par Survivor Space

- Initial: 35061760

- Used: 35061752

- Committed: 35061760

- Maximum: 143130624

Pool: CMS Old Gen

- Initial: 701890560

- Used: 12659464

- Committed: 701890560

- Maximum: 2863333376

mysql> admin execute on frontend '

import com.starrocks.monitor.jvm.JvmInfo;

out.append("${JvmInfo.getThreadDumpWithPattern(\"PUBLISH\")}")

';

Query OK, 0 rows affected (0.05 sec)

"PUBLISH_VERSION" Id=29 TIMED_WAITING

at java.lang.Thread.sleep(Native Method)

at com.starrocks.common.util.Daemon.run(Daemon.java:121)

13 BE 动态执行脚本

StarRocks 支持通过 SQL 命令行在 BE 执行 wren 脚本,需要 admin 权限,这样获取 BE 进程的运行时信息就很方便,下面是个获取 tablet 元数据的示例:

mysql> admin execute on 10004 '

'> var ts = StorageEngine.get_tablet_infos(-1,-1)

'> for (t in ts) {

'> System.print("%(t.tablet_id) %(t.data_size)")

'> }

'> ';

Query OK, 0 rows affected (0.01 sec)

10009 0

10011 0

10013 0

...

mysql> admin execute on 10004 '

'> var t = StorageEngine.get_tablet(11005)

'> System.print("info: %(t.updates().toPB().toString())")

'> ';

Query OK, 0 rows affected (0.00 sec)

info: {"versions":[{"version":{"major":"3","minor":"1"},"creationTime":"1679897711","rowsets":[2],"compaction":{"startVersion":{"major":"3","minor":"0"},"inputs":[0,1],"outputs":[2]}},{"version":{"major":"4","minor":"0"},"creationTime":"1679900665","rowsets":[2,3],"deltas":[3]},{"version":{"major":"5","minor":"0"},"creationTime":"1679900666","rowsets":[2,3,4],"deltas":[4]},{"version":{"major":"6","minor":"0"},"creationTime":"1679900667","rowsets":[2,3,4,5],"deltas":[5]},{"version":{"major":"7","minor":"0"},"creationTime":"1679900667","rowsets":[2,3,4,5,6],"deltas":[6]},{"version":{"major":"8","minor":"0"},"creationTime":"1679900667","rowsets":[2,3,4,5,6,7],"deltas":[7]},{"version":{"major":"9","minor":"0"},"creationTime":"1679900667","rowsets":[2,3,4,5,6,7,8],"deltas":[8]},{"version":{"major":"10","minor":"0"},"creationTime":"1679900668","rowsets":[2,3,4,5,6,7,8,9],"deltas":[9]},{"version":{"major":"11","minor":"0"},"creationTime":"1679900668","rowsets":[2,3,4,5,6,7,8,9,10],"deltas":[10]},{"version":{"major":"12","minor":"0"},"creationTime":"1679900668","rowsets":[2,3,4,5,6,7,8,9,10,11],"deltas":[11]},{"version":{"major":"13","minor":"0"},"creationTime":"1679900668","rowsets":[2,3,4,5,6,7,8,9,10,11,12],"deltas":[12]},{"version":{"major":"13","minor":"1"},"creationTime":"1679900708","rowsets":[13],"compaction":{"startVersion":{"major":"13","minor":"0"},"inputs":[2,3,4,5,6,7,8,9,10,11,12],"outputs":[13]}}],"applyVersion":{"major":"13","minor":"1"},"nextRowsetId":14,"nextLogId":"28"}

mysql> admin execute on 10004 '

'> for (dir in StorageEngine.get_data_dirs()) {

'> System.print("dir: %(dir.path()) rocksdb: %(dir.get_meta().sst_file_size())")

'> var result = dir.get_meta().compact().toString()

'> System.print("compact %(result) size: %(dir.get_meta().sst_file_size())")

'> }

'> ';

Query OK, 0 rows affected (0.00 sec)

dir: /home/decster/projects/starrocks/localrun/be/storage rocksdb: 156484

compact OK size: 12708

TalkCody 欢迎 Star&共建

欢迎关注微信公众号